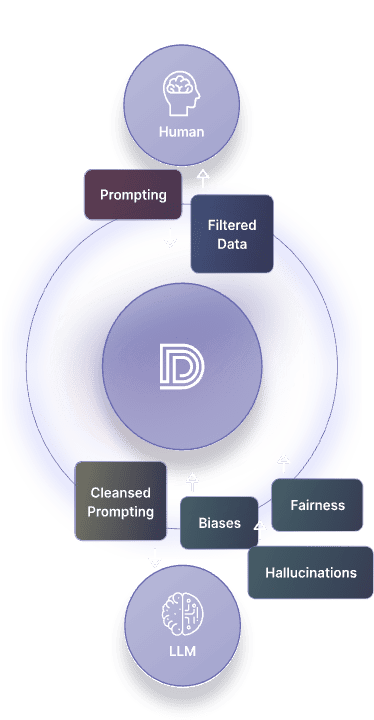

DeepKeep is an AI-native platform designed to strengthen the security and trustworthiness of AI applications. It positions itself as the only generative-AI-built platform that both identifies seen and unseen vulnerabilities across the AI lifecycle and applies automated security and trust remediation. This makes DeepKeep a strong fit for businesses that rely heavily on AI, GenAI, and LLM technologies and need to protect their operations against cyber threats and compliance risks.

Safeguarding artificial intelligence with AI-native security and trustworthiness.

DeepKeep’s solution for LLMs

Security and trustworthiness are critical when using an external LLM (such as ChatGPT, Claude, or Gemini) for content generation, summaries, translations, and similar tasks, and even more so when using an internal, fine-tuned LLM.

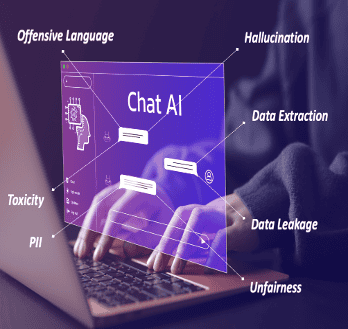

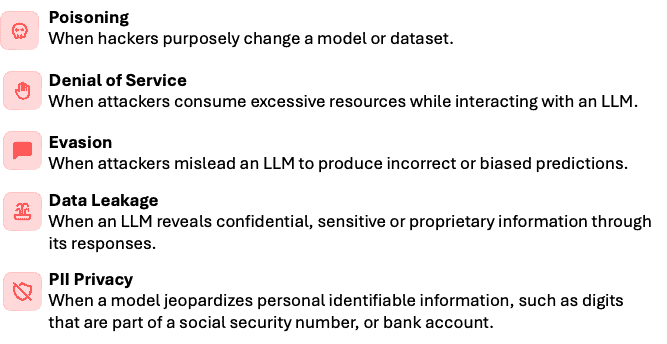

- Protect against LLM attacks, including prompt injection, adversarial manipulation and semantic attacks

- Identify and alert against hallucination using a hierarchical system of data sources, including both internal and trusted external references

- Safeguard against data leakage, protecting sensitive data and personally identifiable information (PII)

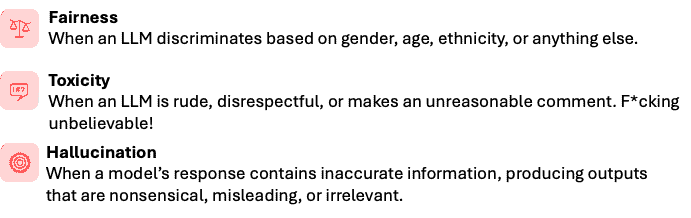

- Detect and remove toxic, offensive, harmful, unfair, unethical, or discriminatory language

Security

Securing AI models from the development phase through the entire product lifecycle, including risk assessment, protection, monitoring and mitigation.

Trustworthiness

Valid, reliable, resilient, accountable, fair, transparent and explainable.

LLMs Lie And Make Mistakes. The Question Is Not If, But When And How